Floodplain pain

Few things knock the gravy off my biscuits faster than finding the perfect site only to toggle on the FEMA floodplain overlay to discover that the tract is positively riddled with special flood hazard area.

It's a familiar culprit for turning an "oh yes" to an "aw naw".

Impact on development

Is floodplain always a deal killer? Absolutely not.

Is a bunch of it? Sometimes no, but quite often yes.

Developing in the FEMA-designated floodplain often requires significant site work at significant expense to change the water flow, reduce flood impacts, and raise the elevation of building sites above the Base Flood Elevation (BFE). And all of that must not adversely affect drainage patterns / increase flood levels of neighboring properties. This process tends not to be short on development expense, studies, or red tape and will involve sign off from FEMA. First conditionally before development, then after with a CLOMR / LOMR (respectively) to comply with National Flood Insurance Program (NFIP) requirements.

The floodplain area of a property, when substantial, often has to be discounted so much that the likelihood of a land owner and developer seeing eye-to-eye on a value seems to be much rarer than not.

Finding sites that work

The big determinants for whether or not a site with floodplain will be acceptable for a given development use case include:

- Particular development use case

- Size of tracts

- Floodplain area

- Floodplain orientation

- Elevation deficiency (site elevation relative to Base Flood Elevation)

- Alternative uses of floodplain

- Expense of remediation

- Sales price

When searching for land for a given development use case, it's not unreasonable to assign a specific threshold of floodplain that you're willing to entertain.

If a Master Planned Community developer is looking at two 1,000 acre tracts, one with 18% floodplain and the other with 80% floodplain, it's unlikely they'd chase the second.

When on the hunt for those sites with high probability of success, filtering on a floodplain threshold can weed out many of the candidates that are likely to be unfeasible.

I don't know of a tool that can do that filtering.

Let's build it.

Special flood hazard area

The "floodplain" we've been talking about, for the most part, has been a reference to the more specific Special Flood Hazard Area (SFHA).

An area having special flood, mudflow or flood-related erosion hazards and shown on a Flood Hazard Boundary Map (FHBM) or a Flood Insurance Rate Map (FIRM) Zone A, AO, A1-A30, AE, A99, AH, AR, AR/A, AR/AE, AR/AH, AR/AO, AR/A1-A30, V1-V30, VE or V. The SFHA is the area where the National Flood Insurance Program's (NFIP's) floodplain management regulations must be enforced and the area where the mandatory purchase of flood insurance applies. For the purpose of determining Community Rating System (CRS) premium discounts, all AR and A99 zones are treated as non-SFHAs.

FEMA’s Special Flood Hazard Areas are specific zones (often referred to as the 100-year floodplain) where there’s at least a 1% chance of flooding in any given year. They typically come with higher flood insurance requirements and stricter building regulations.

In contrast, other floodplain areas might face lower flood risk (like the 0.2% chance or “500-year floodplain”), and therefore often have fewer or no mandatory requirements—though they can still flood, just less frequently according to FEMA’s estimates.

Fetching FEMA data

We'll use the requests library to fetch FEMA features from their different different MapServer layers and then geopandas to packages the results into geodataframes.

I have found the FEMA MapServer to be pretty fickle compared to others. Often ESRI MapServers have an advertised maximum record count of 1,000 or 2,000 features. Most times I find 500 - 1,000 to be the effective limit and generally default to 500 per call.

With the FEMA server I may be able to fetch those 500 records, but other times can only fetch 10 - and sometimes not that. (Instead I'm met with a 500 Error). Flood hazard areas are both complex and large, which I'm sure contributes to this significantly. Perhaps other reasons too.

Either way. Here's my function for fetching the data. I've had good results with this.

def fetch_all_features(url, params):

"""

Fetch all features from a paginated API. Makes multiple requests if the number of features exceeds maxRecordCount of a single call.

If a 500 error occurs, the function retries with a reduced resultRecordCount of 10,

and if that also fails, retries with a resultRecordCount of 1. Each retry waits for 2 seconds.

Args:

url (str): The API endpoint URL.

params (dict): The query parameters for the API call, including 'resultRecordCount'.

Returns:

list: A list of all features retrieved from the API.

"""

all_features = []

max_record_count = int(params.get("resultRecordCount", 50)) # Default to 50 if not specified

retry_attempt = 0 # Tracks the retry stage (0 = default, 1 = 10, 2 = 1)

# Initialize resultOffset if not already present or is empty

if not params.get("resultOffset"):

params["resultOffset"] = 0

while True:

response = requests.get(url, params=params)

if response.status_code == 200:

data = response.json()

if "features" in data and data["features"]:

all_features.extend(data["features"])

if len(data["features"]) < max_record_count:

break

# Update params for the next page

params["resultOffset"] = int(params.get("resultOffset", 0) or 0) + max_record_count

else:

break

elif response.status_code == 500:

if retry_attempt == 0:

print("Received 500 error. Retrying with a smaller resultRecordCount of 10.")

time.sleep(2) # Wait 2 seconds before retrying

params["resultRecordCount"] = 10

max_record_count = 10

retry_attempt = 1

elif retry_attempt == 1:

print("Received 500 error again. Retrying with the smallest resultRecordCount of 1.")

time.sleep(2) # Wait 2 seconds before retrying

params["resultRecordCount"] = 1

max_record_count = 1

retry_attempt = 2

else:

print("Error 500 persisted even after retries.")

return None

else:

print(f"Error {response.status_code}: {response.text}")

return None

return all_features

Fetch FEMA FIRM panel IDs

The primary layer we'll be working with, the Flood Hazard Zones layer, is massive. It will most likely need to be filtered for most use case. For me, in particular, I'm focused on floodplain in Texas.

The attributes that we can filter on, however, are kind of sparse. (There is not a state field for example, and passing a bounding box capturing Texas to the endpoint would both be imprecise and too large to be most helpful).

However, the layer does contain DFIRM_ID that we can use to filter on specific DFIRMS (or Digital Flood Insurance Rate Maps). In Texas at least, these FIRM panels don't uncommonly correspond to county lines. Other times they're study areas that don't correspond to a particular county.

But either way, they are a more bitesize geographic filter that we can use. To get the DFIRM_ID values that we'll need to pass to the Flood Hazard Zones layer, we'll query the FIRM Panels layer.

# Define State FIPS code for Texas

texas_fips = 48

# URL for querying the FEMA Flood Hazard Zones

url = "https://hazards.fema.gov/arcgis/rest/services/FIRMette/NFHLREST_FIRMette/MapServer/1/query?"

# Define the parameters for the query

params = query_params = {

"where": f"ST_FIPS = '{texas_fips}'",

"outFields": "DFIRM_ID",

"returnGeometry": "false",

"f": "json",

"maxRecordCount": 500,

}

# Fetch all data using pagination if needed

features = fetch_all_features(url, params)

# Generate list of unique DFIRM_IDs

dfirm_ids = list({feature['attributes']['DFIRM_ID'] for feature in features})

# Combine the list of dfirm_ids into an SQL-compatible string

dfirm_ids_str = ", ".join([f"'{dfirm_id}'" for dfirm_id in dfirm_ids])Here I'm fetching all of the FIRM panel IDs for Texas. The result looks something like this.

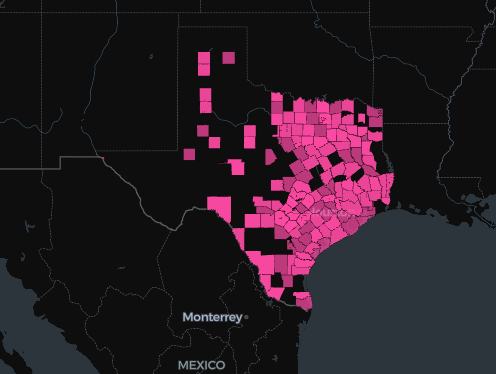

Visualize NFHL availability boundaries

Let's look at the areas that those FIRM panels represent. To do that, we'll pass those dfirm_ids to the National Flood Hazard Layer Availability layer.

# URL for querying the FEMA Flood Hazard Zones

url = "https://hazards.fema.gov/arcgis/rest/services/FIRMette/NFHLREST_FIRMette/MapServer/0/query?"

# Define the parameters for the query

params = query_params = {

"where": f"STUDY_ID IN ({dfirm_ids_str})",

"outFields": "*",

"returnGeometry": "true",

"f": "geojson",

"maxRecordCount": 500,

}

# Fetch all data using pagination if needed

features = fetch_all_features(url, params)

# Generate list of unique DFIRM_IDs

nfhl_availability = gpd.GeoDataFrame.from_features(features, crs="EPSG:4326")We'll produce a GDF with the results and inspect it with the (amazing IMO) lonboard library. We'll start by using their simple viz function.

viz(nfhl_availability)

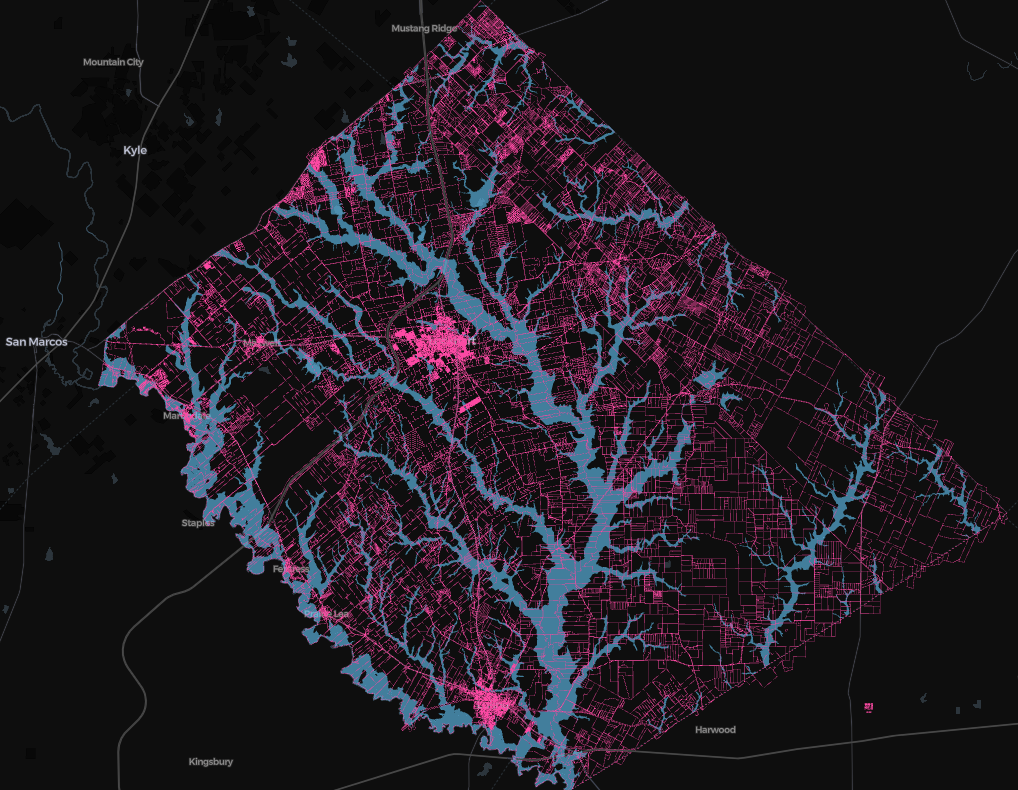

This is where flood hazard data will be available for us. Far from all of Texas, but it does have most of what we need.

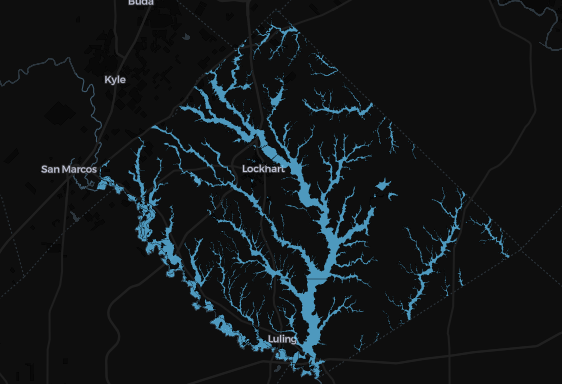

Fetching flood hazard zones

Now let's fetch the actual flood hazard area we're interested in. For this write up, we'll isolate our example to a single county - Caldwell.

I'll quickly hardcode only the Caldwell County DFIRM_ID to our dfirm_ids list.

# Hard-code just Caldwell County DFIRM ID for testing

dfirm_ids = ['48055C']Then we'll fetch the flood hazard area.

A couple notes:

- This code works its way through a single DFIRM, fetching all features, then moves on to the next. (In our example we only have one)

- We're only fetching Special Flood Hazard Area, using the argument

SFHA_TF = 'T' - There are more params here than I'd normally pass. I felt like I saw fewer 500 errors when I was more explicit and fetched only what I needed.

Here it is:

# URL for querying the FEMA Flood Hazard Zones

base_url = "https://hazards.fema.gov/arcgis/rest/services/FIRMette/NFHLREST_FIRMette/MapServer/20/query"

all_features = []

for dfirm in dfirm_ids:

where_clause = f"DFIRM_ID = '{dfirm}' AND SFHA_TF = 'T'"

params = {

"where": where_clause,

"text": "",

"objectIds": "",

"time": "",

"timeRelation": "esriTimeRelationOverlaps",

"geometry": "",

"geometryType": "esriGeometryEnvelope",

"inSR": "",

"spatialRel": "esriSpatialRelIntersects",

"distance": "",

"units": "esriSRUnit_Foot",

"relationParam": "",

"outFields": "DFIRM_ID,FLD_ZONE",

"returnGeometry": "true",

"returnTrueCurves": "false",

"maxAllowableOffset": "",

"geometryPrecision": "",

"outSR": "",

"havingClause": "",

"returnIdsOnly": "false",

"returnCountOnly": "false",

"orderByFields": "",

"groupByFieldsForStatistics": "",

"outStatistics": "",

"returnZ": "false",

"returnM": "false",

"gdbVersion": "",

"historicMoment": "",

"returnDistinctValues": "false",

"resultOffset": "",

"resultRecordCount": "500",

"returnExtentOnly": "false",

"sqlFormat": "none",

"datumTransformation": "",

"parameterValues": "",

"rangeValues": "",

"quantizationParameters": "",

"featureEncoding": "esriDefault",

"f": "geojson"

}

# Fetch all data using pagination if needed

features = fetch_all_features(url=base_url, params=params)

# Extend the master list with new features

if features:

print(f'FIRM panel {dfirm} has {len(features)} features.')

all_features.extend(features)

else:

print(f'No features for FIRM panel {dfirm}.')Let's viz it up.

# Convert the GeoJSON data to a GeoDataFrame

sfha = gpd.GeoDataFrame.from_features(all_features, crs="EPSG:4326")

viz(sfha)

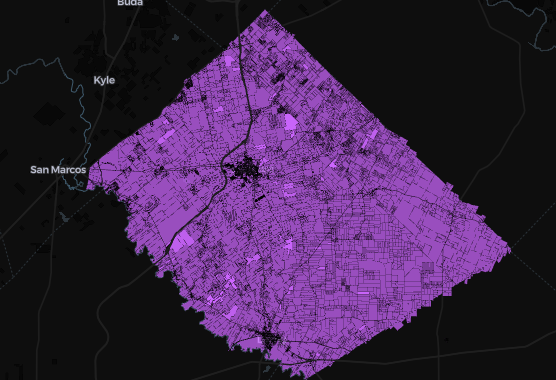

Corresponding land parcels

Now recall, my goal here is to evaluate floodplain in the context of land parcels. So I'll need to pull in the respective land parcels as well in preparation for that next step.

Calculating floodplain by parcel

Here's the good stuff.

For each land parcel, we'll calculate the absolute and relative special flood hazard area. (Will take a min to run).

# Define a projected CRS

projected_crs = "EPSG:32615"

# Reproject both GeoDataFrames to the projected CRS

parcels_projected = parcels.to_crs(projected_crs)

sfha_projected = sfha.to_crs(projected_crs)

nfhl_availability_projected = nfhl_availability.to_crs(projected_crs)

# Spatial intersection to determine if parcels fall within NFHL availability areas

nfhl_intersection = gpd.overlay(parcels_projected, nfhl_availability_projected, how='intersection')

# Add a flag field to indicate if the parcel falls within an NFHL availability area

parcels_projected['within_nfhl'] = parcels_projected['geometry'].apply(

lambda geom: any(nfhl_intersection['geometry'].intersects(geom))

)

# Reproject parcels back to the original CRS after adding the flag

parcels['within_nfhl'] = parcels_projected['within_nfhl']

# Spatial intersection to get overlapping areas between parcels and SFHA

intersection_projected = gpd.overlay(parcels_projected, sfha_projected, how='intersection')

# Calculate the absolute SFHA area for each intersected geometry (in square meters)

intersection_projected['sfha_area_sqm'] = intersection_projected.geometry.area

# Aggregate SFHA areas by parcel ID

sfha_area_per_parcel = intersection_projected.groupby('Prop_ID')['sfha_area_sqm'].sum().reset_index()

# Calculate the total area of each parcel in the projected CRS (in square meters)

parcels_projected['total_area_sqm'] = parcels_projected.geometry.area

# Extract the total area and within_nfhl flag back into the original CRS parcels GeoDataFrame

parcels['total_area_sqm'] = parcels_projected['total_area_sqm']

parcels['within_nfhl'] = parcels_projected['within_nfhl']

# Merge the SFHA area data back into the original parcels GeoDataFrame

parcels = parcels.merge(sfha_area_per_parcel, on='Prop_ID', how='left').fillna(0)

# Ensure 'sfha_area_sqm' exists after the merge

if 'sfha_area_sqm_y' in parcels.columns:

parcels.rename(columns={'sfha_area_sqm_y': 'sfha_area_sqm'}, inplace=True)

# Convert areas to acres (1 acre = 4,046.8564224 square meters)

parcels['total_area_acres'] = parcels['total_area_sqm'] / 4046.8564224

parcels['sfha_area_acres'] = parcels['sfha_area_sqm'] / 4046.8564224

# Fix sfha_percentage calculation to avoid NaN or inf and round to 2 decimal places

parcels['sfha_percentage'] = parcels.apply(

lambda row: round((row['sfha_area_sqm'] / row['total_area_sqm'] * 100), 2) if row['total_area_sqm'] > 0 else 0,

axis=1

)Now let's map it.

parcel_layer = PolygonLayer.from_geopandas(

parcels,

get_line_width=20, # width in default units (meters)

line_width_min_pixels=0.2, # minimum width when zoomed out

get_fill_color=[252,73,163,0],

get_line_color= [252,73,163],

)

flood_layer = PolygonLayer.from_geopandas(

sfha,

get_fill_color=[102, 204, 255, 150],

)m = Map(layers=[flood_layer, parcel_layer], basemap_style=basemap.CartoBasemap.DarkMatter, _height=800)

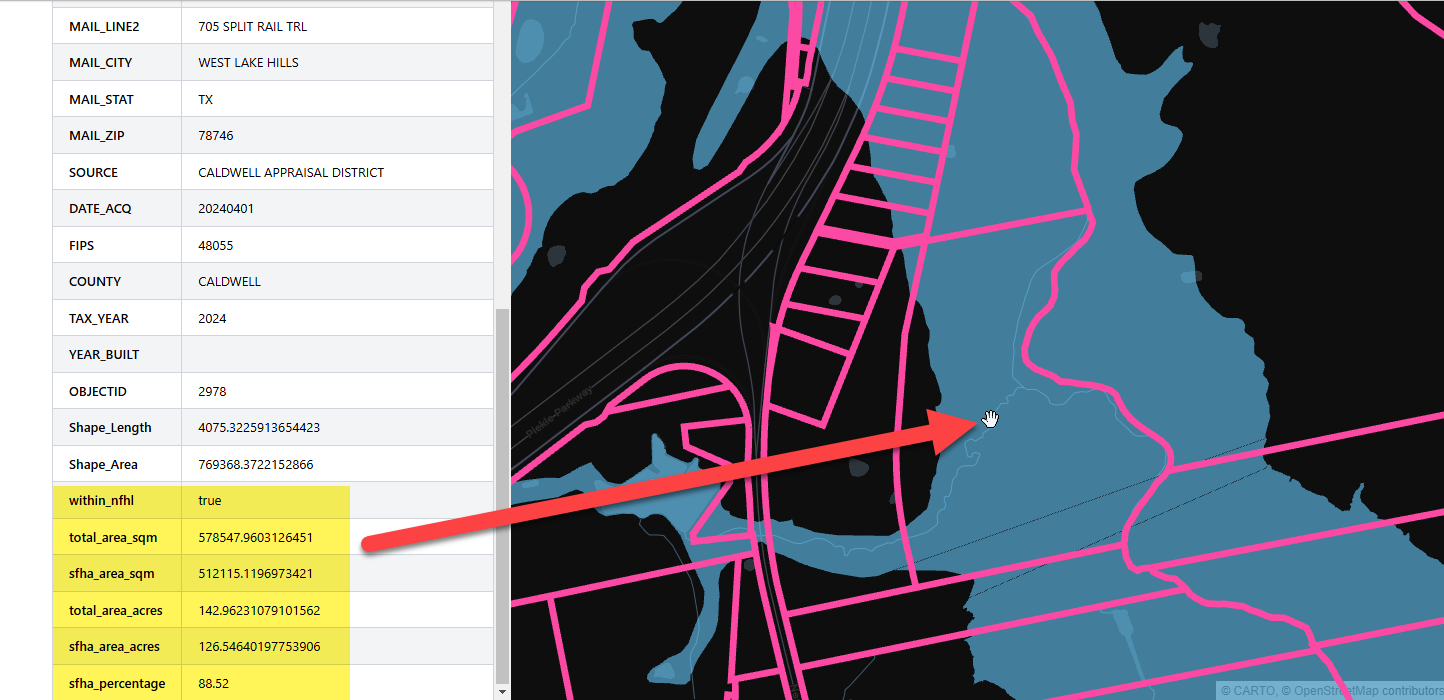

m

And a closer look, at this parcel for example, reveals ± 126 acres of SFHA for this ± 142 acre tract shaking out to a Special Flood Hazard Area percentage of 88.52%.

Probably a pass for many use cases.

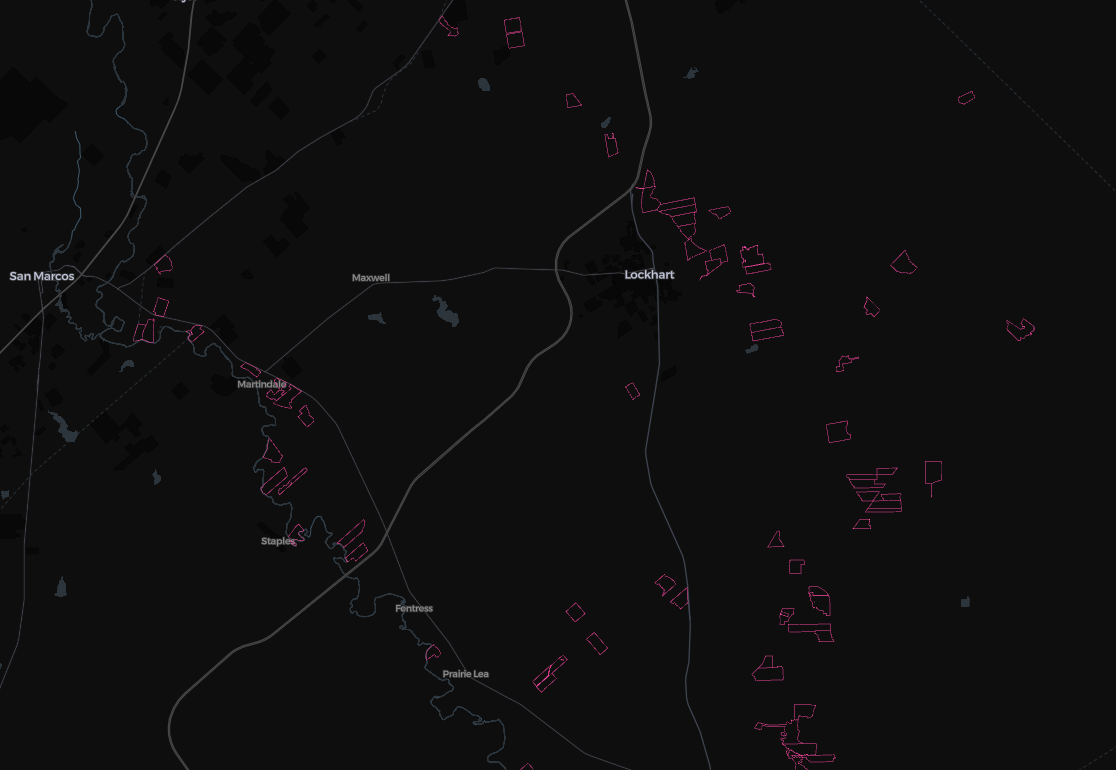

Now let's say we wanted to identify some parcels to avoid. Let's say our criteria include:

- Parcels between 50 - 200 acres

- A SFHA threshold of 80%

filtered_parcels = parcels[

(parcels['sfha_percentage'] >= 80) &

(parcels['total_area_acres'].between(50, 200))

]Here are those parcels we'd avoid.

In short, this combined with other criteria can help DQ the opportunities that are less likely to be feasible and can expedite the site selection process. Saving precious time and misses.

In the coming weeks I plan to publish a Fused UDF that can accept a users geodataframe and return that GDF with relative and absolute special flood hazard measures. 🍻.